This research presents a pipeline that transforms stereo music into multi-channel haptic feedback through AI-based track separation, specialized filtering, and spatial mapping on a vest, providing an immersive audio-haptic experience. Future work will focus on real-time processing and enhancing inclusivity for individuals with sensory impairments.

Introduction

In this work, we reflect on the creation of haptic content. Just as a screen is useless without an image to display on it, haptic devices need a signal to provide feedback to the user. Here, we focus on creating a haptic signal for music haptization, in order to provide an additional sensory experience when listening to music. We use Actronika’s Skinetic vest (Actronika, 2024b) as haptic hardware. Hearing sound while feeling associated haptic feedback is a common sensation at concerts, whether loud pop concerts or calmer ones. Sometimes the haptic sensations are very weak, and sometimes rock kicks blast through the bones, but this haptic component is always an important part of the concert experience, and it is missing when listening to music with headphones. We propose a processing pipeline that transforms simple stereo music into a haptic experience, allowing users to feel the music even with headphones.

Constraints and Advantages of Haptic Signals

Like every other communication channel, haptics needs a signal to convey information to the user. In this context, a signal is a temporal sequence of one or more values that vary over time, either discretely or continuously. These values carry the information or message the device is meant to communicate to the user. A good example is the sound signal: a single continuous value that oscillates over time, forming the sound we hear. We can also think of the RAW image format, whose signal has multiple values, one for each pixel of the picture.

Haptic feedback can be conveyed through several physical channels; we focus our research on vibrotactile haptics. Different methods are available to produce vibrotactile feedback; here is a non-exhaustive list:

- Electrodynamic Vibrating Actuators: The loudspeaker is a typical example of an electrodynamic actuator. A permanent magnet interacts with a coil, which transfers its movement to a diaphragm. This diaphragm creates mechanical vibrations and, as a result, sound waves by changing the pressure of the surrounding air. If the diaphragm has a certain mass, any object touching it will perceive the mechanical vibration.

- Piezoelectric Actuators: Piezoelectric materials are relatively recent and continuously evolving. They are available in ceramic or polymer materials and have the property of deforming when an electric field is applied.

- Eccentric Mass Motors: This type of actuator rotates a mass whose center of gravity is offset from the axis of rotation. The off-center mass produces a centrifugal force whose direction rotates with the shaft, which makes the motor, and anything attached to it, vibrate.

Every feedback method has its advantages and drawbacks, which may concern feedback strength, power consumption or signal precision. For our work, we need precise haptic feedback, but not necessarily strong feedback. The hardware used is a vest with 20 electrodynamic vibrating actuators placed on the user’s torso. These actuators are driven by a 20-channel audio signal in the PCM16 format. This uncompressed format encodes the amplitude values with a 16-bit depth, the standard bit depth for CD audio. We used a sample rate of 48 kHz.
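As an illustration of this output format, the following minimal sketch writes a 20-channel, 16-bit PCM WAV file at 48 kHz with Python’s standard `wave` module. It assumes (the source does not describe its file-writing code) that the processed haptic channels are held as a floating-point NumPy array of shape `(n_samples, 20)` with values in [-1, 1]; `write_pcm16_wav` is a hypothetical helper name.

```python
import io
import wave

import numpy as np

SAMPLE_RATE = 48_000  # Hz, the rate used for the vest signal
SAMPLE_WIDTH = 2      # bytes per sample: 16-bit PCM


def write_pcm16_wav(target, signals: np.ndarray, sample_rate: int = SAMPLE_RATE) -> None:
    """Write float signals in [-1, 1], shaped (n_samples, n_channels), as a PCM16 WAV.

    `target` may be a file path or a writable binary file object.
    """
    if signals.ndim != 2:
        raise ValueError("expected an array of shape (n_samples, n_channels)")
    # Clip to the valid range, then scale to the signed 16-bit integer range.
    clipped = np.clip(signals, -1.0, 1.0)
    pcm = np.round(clipped * 32767).astype("<i2")  # little-endian int16, as WAV requires
    with wave.open(target, "wb") as wav:
        wav.setnchannels(pcm.shape[1])
        wav.setsampwidth(SAMPLE_WIDTH)
        wav.setframerate(sample_rate)
        # C-order bytes interleave the channels frame by frame, which is the WAV layout.
        wav.writeframes(pcm.tobytes())


# Usage: one second of silence on all 20 actuators, written to an in-memory buffer.
buffer = io.BytesIO()
write_pcm16_wav(buffer, np.zeros((SAMPLE_RATE, 20)))
```

Note that `wave` always writes a plain PCM header; some tools expect the `WAVE_FORMAT_EXTENSIBLE` header for files with more than two channels, so the requirements of the player driving the vest should be checked.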

AI and Signal Processing for Music-Driven Haptic Feedback

Just as sound accompanying images helps users understand what is shown to them, haptics can improve the user’s perception of music. Regarding rhythm, haptics reduces the minimum difference required for two distinct rhythms to be told apart (Bernard et al., 2022). Experiments have also augmented audio notifications (such as the sound of a key press on a smartphone) and music with haptics on phones (Chang and O’Sullivan, 2005): users tend to prefer audio accompanied by haptic feedback and are less demanding about audio quality. For our hardware, the Skinetic vest, Actronika has released a real-time audio-to-haptics feature that takes the sound input, filters it and plays the filtered version on the vest (Actronika, 2024a). However, this algorithm does not spatialize the audio on the vest: every actuator plays the same signal.

Our work focuses on separating the music’s sources (different groups of instruments), finding the best filters for each group of instruments, and spatializing the haptic feedback on the vest.

The program has three main parts. First comes track separation: because the components of a song do not need the same processing (drums need sharp and powerful haptics, whereas vocals need something softer, for example), we separate the music into four different tracks. Then, each stereo pair of tracks (for example, bass left and bass right) goes through a series of filters and signal-processing steps fine-tuned for that track. Finally, we merge the four haptized tracks into one 20-channel audio file, in which each track is played on the channels corresponding to its location on the torso.

-- Track separation

The first step of our processing pipeline is to separate the tracks. Music comprises different groups of tracks; the most standard ones are drums, bass, vocals and lead. Track separation is needed because haptic feedback should not feel the same for discrete and continuous signals, or for signals of different frequencies.

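To separate the tracks, we used an implementation of Demucs, the music source separation model developed by Facebook Research, with its latest model, Demucs v4 (Rouard, Massa and Défossez, 2023). Demucs outputs four stereo stems: drums, bass, vocals and "other", the latter corresponding to our lead track.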
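The separation step can be reproduced with the `demucs` command-line tool (installable with `pip install demucs`). The sketch below is one possible way to call it, not the authors’ code: it assumes the documented `-n` (model name) and `-o` (output folder) options, the v4 default model `htdemucs`, and the default output layout `<out>/<model>/<track name>/<stem>.wav`. The helper names are hypothetical.

```python
import subprocess
from pathlib import Path

STEMS = ("drums", "bass", "other", "vocals")  # the four stems produced by Demucs v4


def demucs_command(track: Path, out_dir: Path, model: str = "htdemucs") -> list[str]:
    """Build the Demucs command line for one audio file."""
    return ["demucs", "-n", model, "-o", str(out_dir), str(track)]


def expected_stems(track: Path, out_dir: Path, model: str = "htdemucs") -> dict[str, Path]:
    """Paths where Demucs writes each stereo stem: <out_dir>/<model>/<track name>/<stem>.wav."""
    folder = out_dir / model / track.stem
    return {stem: folder / f"{stem}.wav" for stem in STEMS}


def separate(track: Path, out_dir: Path = Path("separated")) -> dict[str, Path]:
    """Run Demucs (must be installed) and return the path of each separated stem."""
    subprocess.run(demucs_command(track, out_dir), check=True)
    return expected_stems(track, out_dir)
```

Keeping the command builder and the path logic separate makes it easy to skip separation when the stems already exist on disk.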

-- Filters

To translate the music into haptics, we used a series of filters. The main challenge of this process was to extract all the interesting features of the sound, even those in the low or high frequency ranges, and translate them into low-frequency signals with as few audible components as possible. Humans can theoretically hear frequencies down to about 20 Hz, but in practice the haptic signal can contain somewhat higher frequencies without disturbing the user’s experience. Here, we describe each filter and then specify which ones were used on each track.

- The most basic filters we used are high-pass and low-pass filters, which cut off unwanted frequencies in the signal. As their names suggest, a high-pass filter cuts low frequencies and a low-pass filter cuts high frequencies. Both filters have the same parameters: the cut-off frequency (the frequency at which the filter starts taking effect) and the order (the higher the order, the sharper the filter).

- We then have band-pass filters, which can be seen as the combination of a low-pass and a high-pass filter. These filters have three parameters: the low cut-off, the high cut-off and the order.

- We also use a transient-shaping filter. This filter manipulates the transient components of an audio signal independently from its sustained elements. Transients are the initial peaks or sudden changes in a sound, such as the attack of a drum hit, the pluck of a string, or the consonants in speech. The principal step of this filter is to detect the attacks (where the amplitude of the sound rapidly increases from silence, or a low level, to its peak level). We first locate the attack samples by splitting the audio into small chunks of a given duration (the attack time), which tells us whether each time window contains an attack. We then use a high-pass filter to detect sharp transients. After that, we compute an envelope that is higher on the attacks and lower on the rest, and apply a gain based on the envelope’s maximum value and the filter’s intensity. With these steps, the audio tracks have amplified attacks and attenuated sustain.

- We then have a pitch-shift effect, which shifts the signal’s frequencies up or down.

- Finally, we use a Hilbert envelope calculation to extract the signal’s envelope from the analytic signal. The analytic signal is a complex signal whose real part is the original real signal $x(t)$ and whose imaginary part is its Hilbert transform $\mathcal{H}\{x\}(t)$. The envelope represents the instantaneous amplitude (magnitude) of the signal over time, which is obtained by taking the magnitude of the analytic signal:

$$\mathrm{Envelope}(t) = \left| x(t) + j\,\mathcal{H}\{x\}(t) \right| = \sqrt{x(t)^2 + \mathcal{H}\{x\}(t)^2}$$
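The three basic filters can be sketched with SciPy. The source does not name the filter family, so this sketch assumes Butterworth filters designed as second-order sections (`butter(..., output="sos")`) and applied forward and backward with `sosfiltfilt`, which gives zero phase shift but doubles the effective order. The function names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 48_000  # sample rate in Hz


def _apply(sos, x):
    # Zero-phase filtering along the time axis; works for mono (n,) and stereo (n, 2) arrays.
    return sosfiltfilt(sos, np.asarray(x, dtype=float), axis=0)


def lowpass(x, cutoff_hz, order, fs=FS):
    return _apply(butter(order, cutoff_hz, btype="lowpass", fs=fs, output="sos"), x)


def highpass(x, cutoff_hz, order, fs=FS):
    return _apply(butter(order, cutoff_hz, btype="highpass", fs=fs, output="sos"), x)


def bandpass(x, low_hz, high_hz, order, fs=FS):
    return _apply(butter(order, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos"), x)
```

Second-order sections are used because high orders with cut-offs as low as 10 to 150 Hz at a 48 kHz sample rate can make the transfer-function (`b`, `a`) form numerically unstable.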

These filters were applied in different orders and with different parameters for each of the four pairs of tracks.

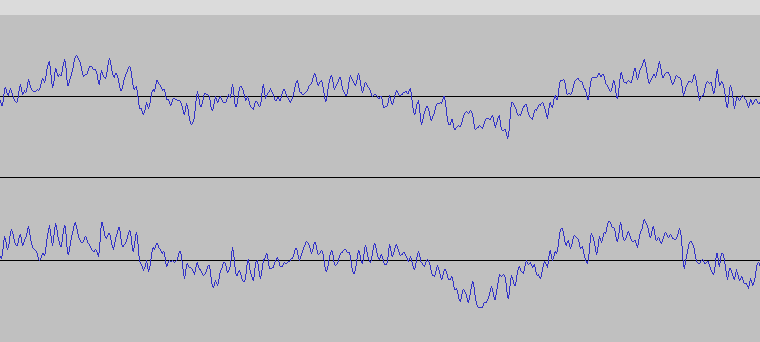

The bass track was first passed through a low-pass filter with a cut-off frequency of 80 Hz and an order of 5. This first filter removes the artifacts left by track separation and gives us a clean bass track. We then apply a transient shaper with an intensity of 4 and an attack time of 4 ms. This processing amplifies changes in the bass, making them more perceptible and increasing the track’s clarity in the case of plucked bass. We then apply a second low-pass filter with a cut-off frequency of 150 Hz and an order of 7, which removes any artifacts created by the transient-shaping filter.
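The bass chain can be sketched as follows. The low-pass parameters are those given above (again assuming zero-phase Butterworth filters), but the transient shaper is a hypothetical implementation written from the textual description, not the authors’ code: it measures the peak level of consecutive windows of the attack length, treats a window as an attack when it rises above the loudest of the preceding windows within an assumed 50 ms `hold_ms` (a stand-in for the high-pass detection step), boosts those windows according to `intensity`, and rescales the result to the input peak.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view
from scipy.signal import butter, sosfiltfilt

FS = 48_000  # sample rate in Hz


def lowpass(x, cutoff_hz, order, fs=FS):
    # Assumed zero-phase Butterworth low-pass along the time axis.
    sos = butter(order, cutoff_hz, btype="lowpass", fs=fs, output="sos")
    return sosfiltfilt(sos, np.asarray(x, dtype=float), axis=0)


def transient_shaper(x, intensity, attack_ms, hold_ms=50.0, fs=FS):
    """HYPOTHETICAL transient shaper written from the description, not the authors' code."""
    x2 = np.asarray(x, dtype=float).reshape(len(x), -1)    # (n_samples, n_channels)
    chunk = max(1, round(fs * attack_ms / 1000))           # samples per analysis window
    n_chunks = -(-len(x2) // chunk)                        # ceiling division
    padded = np.zeros((n_chunks * chunk, x2.shape[1]))
    padded[: len(x2)] = np.abs(x2)
    env = padded.reshape(n_chunks, chunk, -1).max(axis=1)  # peak level of each window
    # Attack detection: how far each window rises above the loudest of the previous k windows.
    k = max(1, round(hold_ms / attack_ms))
    history = np.vstack([np.zeros((k, env.shape[1])), env[:-1]])
    prev_max = sliding_window_view(history, k, axis=0).max(axis=-1)
    rise = np.clip(env - prev_max, 0.0, None)
    gain = 1.0 + intensity * rise / (env.max() or 1.0)     # > 1 on attacks, 1 elsewhere
    y = x2 * np.repeat(gain, chunk, axis=0)[: len(x2)]
    # Every gain is >= 1, so this factor is <= 1: the output peak equals the input peak
    # and the sustained parts end up attenuated relative to the attacks.
    y *= np.abs(x2).max() / (np.abs(y).max() or 1.0)
    return y.reshape(np.shape(x))


def process_bass(bass, fs=FS):
    y = lowpass(bass, 80, 5, fs)                            # clean bass band
    y = transient_shaper(y, intensity=4, attack_ms=4, fs=fs)
    return lowpass(y, 150, 7, fs)                           # smooth the shaper's gain steps
```

Because the gain changes abruptly from one window to the next, this shaper introduces small steps in the signal; the final 150 Hz low-pass smooths them, which matches the role the text gives to the second filter.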

The drums are first filtered into two sub-tracks. We filter the track once with a band-pass filter between 10 Hz and 100 Hz with an order of 4 to select the kicks, then filter the original track again with a band-pass filter between 200 Hz and 1100 Hz, also of order 4, to select the snares. These two sub-tracks are then merged into one track by simple addition. We then apply a transient shaper with an intensity of 8 and an attack time of 3 ms, followed by a pitch shift of -12 semitones (one octave down) to bring higher-frequency content (high snares and hi-hats) into the haptic range. Finally, we apply a low-pass filter with a cut-off frequency of 150 Hz and an order of 7 to remove the audible component of the track.
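The first drum step, splitting the track into a kick band and a snare band and summing them, can be sketched with the same assumed zero-phase Butterworth band-pass filters; the helper names are hypothetical. The transient shaper, pitch shift and final low-pass are not repeated here. For the octave-down shift, one option (not necessarily the authors’) is `librosa.effects.pitch_shift(y, sr=48000, n_steps=-12)`, which expects time on the last axis.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 48_000  # sample rate in Hz


def bandpass(x, low_hz, high_hz, order, fs=FS):
    # Assumed zero-phase Butterworth band-pass along the time axis.
    sos = butter(order, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, np.asarray(x, dtype=float), axis=0)


def split_and_merge_drums(drums, fs=FS):
    kicks = bandpass(drums, 10, 100, 4, fs)     # kick band: 10 to 100 Hz, order 4
    snares = bandpass(drums, 200, 1100, 4, fs)  # snare band: 200 to 1100 Hz, order 4
    return kicks + snares                       # merged by simple addition
```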

For the vocals, we start by shifting the pitch down by 24 semitones (two octaves) to bring the frequencies closer to the haptic range. We then apply a band-pass filter between 80 Hz and 200 Hz to keep only the lowest, least audible part of the track. Finally, we enhance the dynamics of the audio data by computing the track’s envelope with a Hilbert transform. The envelope is multiplied by an intensity factor, and the track is then multiplied by this boosted envelope. The result is a track in which the differences between loud and quiet parts are greatly increased.
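The envelope multiplication can be sketched with SciPy’s `scipy.signal.hilbert`, which returns the analytic signal; its magnitude is the envelope defined earlier. The name `envelope_boost` and the interpretation of the intensity as a plain scale factor are assumptions. Because the track is multiplied by its own envelope, a passage at amplitude a comes out at roughly intensity × a², so a 4:1 loudness ratio becomes about 16:1.

```python
import numpy as np
from scipy.signal import hilbert


def envelope_boost(x, intensity):
    """Multiply a track by its Hilbert envelope scaled by `intensity` (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    envelope = np.abs(hilbert(x, axis=0))  # |x + j H{x}|, computed per channel along time
    return x * (intensity * envelope)
```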

For the remaining track, the lead, we first apply a pitch shift of -12 semitones (one octave down). We then apply a band-pass filter between 10 Hz and 300 Hz with an order of 4 to keep only the events that can be felt through haptics, followed by the same Hilbert-envelope multiplication as in the vocal processing. We finish with a low-pass filter with a cut-off frequency of 200 Hz and an order of 7 to remove any audible artifacts.

After all these tracks are processed, a gain is applied to each of them. Its value differs from track to track and can be adjusted to make a given track louder or quieter; it is a fine-tuning option for the end user.

-- Spatial Mapping

After processing the four pairs of tracks, we need to merge them into a single 20-channel WAV file. The torso is quite a sensitive zone for haptics: with a spatial acuity between 1 and 3 cm (Van Erp, 2005) and its proximity to the nervous system, it allows us to create clearly distinguishable zones for each track. Different body parts do not respond the same way to haptic stimuli: soft areas such as the abdomen tend to resonate and enhance sharp haptic feedback, whereas stimuli applied near bones propagate the vibration along the skeleton.

The first spatial discrimination is stereo placement: tracks coming from the left-ear audio are placed on the user’s left, and tracks from the right-ear audio on the right. Second, we place the haptic feedback according to the usual frequencies of the corresponding instruments: the bass has low frequencies, so its haptics are placed at the bottom of the vest, whereas lead instruments often have high frequencies, so their haptics are placed at the top. Unlike the music itself, all haptic tracks share mostly the same frequencies, since the frequency range usable for haptics before the signal becomes audible is very narrow; this second discrimination therefore helps users understand which haptic feedback comes from which part of the music. We thus spatialize the tracks as follows:

- Drums: the drums are placed on the abdomen (left and right corresponding to the music’s left and right channels). Drums produce discrete and sharp haptics; placing this type of haptics on a soft body area makes the kicks resonate, increasing the strength of the feedback. Moreover, at concerts with big kicks, such as rock or electronic music, the kick feels like it strikes from the stage, in front of the listener.

- Bass: the haptics from the bass are placed on the back. The bass is the foundation of the music, and placing it on the back allows the vibration to spread into the body through the spinal column. At concerts, bass haptics often come through the ground, spreading into the body through the bones.

- Vocals: the vocal haptic track is the most heavily modified one. Because some songs have no vocals, or passages without vocals, we place this track on the sides of the torso, under the arms. This area does not feel empty when no vocals are played, yet it is very distinguishable when the music contains vocals. With this location, the system works as well for instrumental music as for songs with singers.

- Other: the remaining haptic track is placed on the chest. Since it groups all the instruments in the mid-to-high frequency range of the music, it is placed at the top of the vest. The rib cage spreads the vibrations slightly, filling the space while linking the high-frequency drum elements with the kicks, and the lead instruments with the bass.
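The routing above can be sketched as a mixing step from the four processed stereo tracks to the 20 actuator channels, including the per-track gains mentioned earlier. The actuator indices in `LAYOUT` are purely hypothetical placeholders (the source does not give the Skinetic channel order), as are the function and parameter names; only the zone assignments and the left/right split come from the text.

```python
import numpy as np

N_ACTUATORS = 20

# HYPOTHETICAL actuator indices for each zone and side; the real channel order of the
# vest must be taken from the manufacturer's documentation.
LAYOUT = {
    "drums":  {"left": [0, 1], "right": [2, 3]},                  # abdomen
    "other":  {"left": [4, 5], "right": [6, 7]},                  # chest
    "vocals": {"left": [8, 9], "right": [10, 11]},                # sides, under the arms
    "bass":   {"left": [12, 13, 14, 15], "right": [16, 17, 18, 19]},  # back
}


def spatialize(stems, gains=None, layout=LAYOUT, n_channels=N_ACTUATORS):
    """Route each processed stereo stem, shaped (n_samples, 2), to its actuators.

    The left channel feeds the left-side actuators of the stem's zone and the right channel
    the right side. `gains` holds optional per-stem amplification (end-user fine-tuning).
    """
    gains = gains or {}
    n_samples = len(next(iter(stems.values())))
    out = np.zeros((n_samples, n_channels))
    for name, stereo in stems.items():
        g = gains.get(name, 1.0)
        for side, column in (("left", 0), ("right", 1)):
            # Broadcast the (n_samples, 1) column to every actuator of this zone and side.
            out[:, layout[name][side]] += g * stereo[:, column][:, None]
    return out
```

The resulting array can then be written as the 20-channel PCM16 file described earlier.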

Discussion and Future Work

The proposed solution processes audio files to generate haptic feedback through track separation and specialized filtering methods. However, the current system has several limitations. The primary area for improvement is processing time: a non-real-time solution significantly reduces the system’s adaptability, since the entire recording must be available before processing can begin. While filtering can be performed nearly in real time, as in many music production applications, the main challenge lies in developing a track separation algorithm that operates with minimal latency. Recent advances in artificial intelligence have introduced models capable of real-time stem separation (Hance AI, 2024), which could improve the functionality and responsiveness of this pipeline.
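The claim that filtering can run nearly in real time can be illustrated with block-based filtering: a causal filter keeps its internal state between audio buffers, so processing a stream buffer by buffer gives the same output as filtering the whole signal at once. This sketch assumes a Butterworth low-pass and uses `scipy.signal.sosfilt` with its `zi` state argument; the class name and the 10 ms buffer size are hypothetical. A streaming filter must be causal: a zero-phase (forward-backward) filter, which is easy to use offline, needs future samples and is not an option here.

```python
import numpy as np
from scipy.signal import butter, sosfilt


class StreamingLowpass:
    """Causal Butterworth low-pass whose state carries over between audio blocks."""

    def __init__(self, cutoff_hz, order, fs=48_000, n_channels=2):
        self.sos = butter(order, cutoff_hz, btype="lowpass", fs=fs, output="sos")
        # sosfilt state: shape (n_sections, 2, n_channels) for blocks of shape (n, n_channels).
        self.zi = np.zeros((self.sos.shape[0], 2, n_channels))

    def process(self, block):
        """Filter one (block_size, n_channels) buffer and keep the final state for the next one."""
        out, self.zi = sosfilt(self.sos, block, axis=0, zi=self.zi)
        return out


# Usage: stream one second of stereo noise in 10 ms buffers (480 samples at 48 kHz).
rng = np.random.default_rng(0)
signal = rng.standard_normal((48_000, 2))
lp = StreamingLowpass(cutoff_hz=150, order=7)
streamed = np.concatenate([lp.process(signal[i:i + 480]) for i in range(0, len(signal), 480)])
```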

Moreover, although this research primarily targets entertainment applications, the underlying technology holds potential for promoting inclusion in artistic events. By providing an additional sensory modality, haptic feedback can enhance the experience for individuals with sensory impairments, such as those with hearing or visual disabilities, thereby increasing their immersion and engagement in artistic performances.

Bibliography

- Actronika (2024a) Plug & Play Audio-to-Haptics: Transform Your Media Experiences with Skinetic. Available at: https://www.skinetic.actronika.com/post/plug-play-audio-to-haptics-transform-your-media-experiences-with-skinetic (Accessed: 11 September 2024).

- Actronika (2024b) Skinetic: Immersive Haptics for VR and Gaming. Available at: https://www.actronika.com/skinetic (Accessed: 15 November 2024).

- Bernard, C., Monnoyer, J., Wiertlewski, M. and Ystad, S. (2022) ‘Rhythm Perception Is Shared Between Audio and Haptics’, Scientific Reports, 12(1), p. 4188. DOI: 10.1038/s41598-022-08152-w.

- Chang, A. and O’Sullivan, C. (2005) ‘Audio-Haptic Feedback in Mobile Phones’, CHI ’05 Extended Abstracts on Human Factors in Computing Systems, pp. 1264–1267. DOI: 10.1145/1056808.1056892.

- Défossez, A. (2021) ‘Hybrid Spectrogram and Waveform Source Separation’, in Proceedings of the ISMIR 2021 Workshop on Music Source Separation. [No page numbers available].

- Fletcher, M.D. (2021) ‘Can Haptic Stimulation Enhance Music Perception in Hearing-Impaired Listeners?’, Frontiers in Neuroscience, 15, p. 723877. DOI: 10.3389/fnins.2021.723877.

- Gunther, E., Davenport, G. and O’Modhrain, S. (2002) ‘Cutaneous Grooves: Composing for the Sense of Touch’, Journal of New Music Research, 32(4), pp. 369–381. DOI: 10.1076/jnmr.32.4.369.18856.

- Hance AI (2024) Hance 2.0: Realtime Stem Separation - Hello, Music Industry! Available at: https://hance.ai/blog/hance-2.0-realtime-stem-separation-hello-music-industry (Accessed: 12 November 2024).

- Paterson, J. and Wanderley, M.M. (2023) ‘Feeling the Future—Haptic Audio: Editorial’, Arts, 12(4), p. 141. DOI: 10.3390/arts12040141.

- Remache-Vinueza, B., Trujillo-León, A., Zapata, M., Sarmiento-Ortiz, F. and Vidal-Verdú, F. (2021) ‘Audio-Tactile Rendering: A Review on Technology and Methods to Convey Musical Information through the Sense of Touch’, Sensors, 21(19), p. 6575. DOI: 10.3390/s21196575.

- Rouard, S., Massa, F. and Défossez, A. (2023) ‘Hybrid Transformers for Music Source Separation’, in ICASSP 23. [No page numbers available].

- Van Erp, J.B.F. (2005) ‘Vibrotactile Spatial Acuity on the Torso: Effects of Location and Timing Parameters’, in First Joint Eurohaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems. World Haptics Conference, pp. 80–85. DOI: 10.1109/WHC.2005.144.