This internship aims to design and build a swarm of three to five desk-sized robots capable of providing haptic feedback to a user in virtual reality.

The robots must therefore be compact, precise, and fast enough that their movements do not disturb the user. Wireless control of the robots and swarm-management algorithms that prevent collisions must also be developed.

The internship also covers the hardware development of an interface, in the form of a cube or a small table, that generates haptic feedback for the user. A wireless link for controlling this haptic feedback is mandatory.

This platform reuses the design principles of the robots, which simplifies and speeds up its development.

Overview

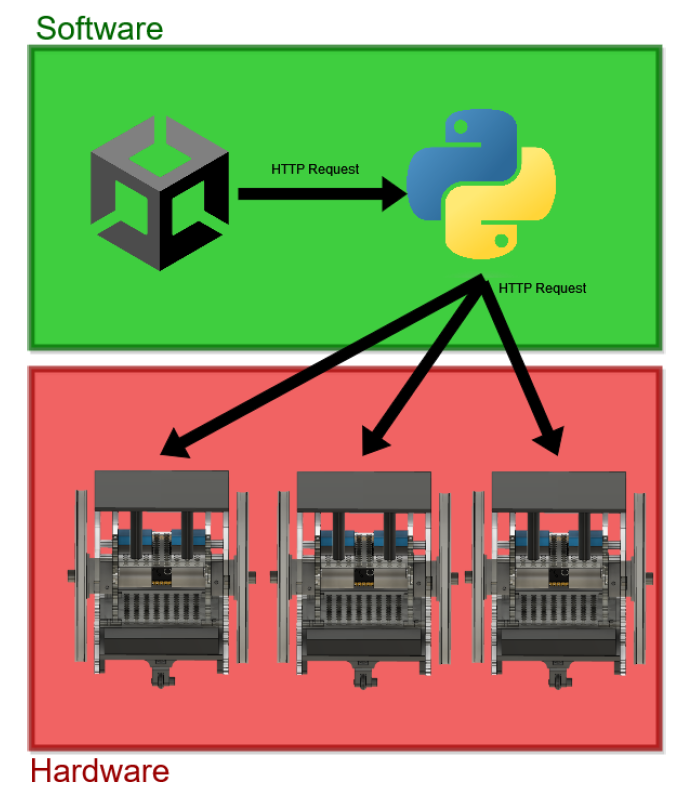

The creation of the swarm of robotic haptic interfaces was split into two parts. The hardware part covers the robots, their power supply, and their communication with the computer. The software part covers an API for controlling the robots and a Unity application that handles the VR side and user interaction.

We chose to develop the entire project ourselves, following a DIY (Do It Yourself) approach, so that we control every element of the architecture.

We first present the software part (the API and the Unity interface), then the hardware part, including the successive iterations of the robots.

Software

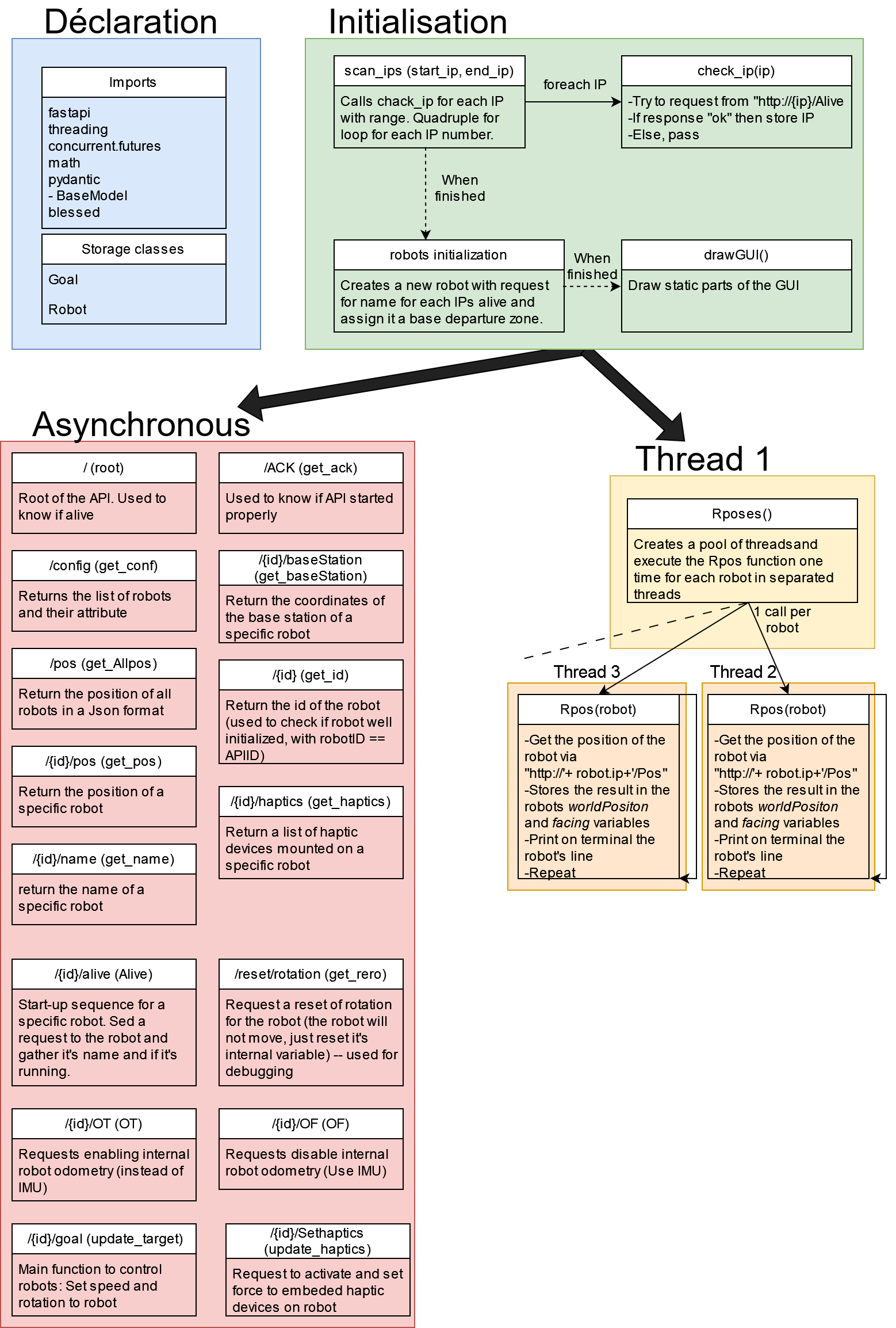

We used Unity and FastAPI to control the robots; the software is written in C# (Unity) and Python (FastAPI).

The API serves as the interface between the robots and the Unity application. In theory, it supports an unlimited number of robots: the only limit is hardware-related, namely the network throughput of the computer and of the access point.
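To illustrate why the robot count is not capped in software, here is a minimal, framework-free sketch of a robot registry. It is not the project's FastAPI code: the classes `Robot` and `RobotRegistry`, the `move_command` method, the JSON message fields, and the IP addresses are all hypothetical, and the sketch assumes each robot is addressed over the Wi-Fi network and receives one JSON message per command. In the real API, logic like this could sit behind a FastAPI endpoint.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field


@dataclass
class Robot:
    """State kept for one robot (hypothetical fields)."""
    robot_id: str
    address: str                              # hypothetical network address
    target: tuple[float, float] | None = None  # last requested position


@dataclass
class RobotRegistry:
    """Robots stored in a dict keyed by id: adding one is a single insert,
    so nothing in this code limits the size of the swarm."""
    robots: dict[str, Robot] = field(default_factory=dict)

    def register(self, robot_id: str, address: str) -> Robot:
        robot = Robot(robot_id, address)
        self.robots[robot_id] = robot
        return robot

    def move_command(self, robot_id: str, x: float, y: float) -> bytes:
        """Record a new target and build the (hypothetical) message to send."""
        robot = self.robots[robot_id]  # raises KeyError for an unknown robot
        robot.target = (x, y)
        return json.dumps({"id": robot_id, "cmd": "move", "x": x, "y": y}).encode()


# Usage: register five robots, then send one of them to a new position.
registry = RobotRegistry()
for i in range(5):
    registry.register(f"robot-{i}", f"192.168.1.{10 + i}")
message = registry.move_command("robot-2", 0.25, 0.10)
```

Registering a sixth or a fiftieth robot costs one dictionary insertion; the practical ceiling is how many messages per second the network can carry, which matches the hardware limitation stated above.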

The project uses the Unity engine to build the VR interface between the user and the robots. We used OpenVR to handle the virtual reality side. It lets us target both Oculus headsets (we use a Meta Quest 2) and SteamVR, which makes it possible to use Vive trackers for measurements.

To build the user experience, we developed a game based on the Minesweeper principle. A grid of virtual buttons, called pods, is placed on a table. One pod is designated as the central element and assigned a value of 5; the other pods have decreasing values the farther they are from the central pod. The user then touches the pods, and each pod's value is conveyed through haptic feedback of varying intensity, produced by a robot that positions itself at the corresponding pod.
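The value layout of the game can be sketched as follows. The text only states that values decrease with distance from the central pod; this sketch assumes the value drops by one per ring of neighbouring pods (Chebyshev distance, as in Minesweeper's 8-neighbourhood), is clamped at zero, and is mapped linearly to a normalized vibration intensity. The function names `pod_values` and `haptic_intensity` are hypothetical.

```python
from __future__ import annotations


def pod_values(rows: int, cols: int, center: tuple[int, int],
               peak: int = 5) -> list[list[int]]:
    """Value of every pod: `peak` at the central pod, minus one per ring
    of distance (Chebyshev distance), never below zero."""
    cr, cc = center
    return [
        [max(0, peak - max(abs(r - cr), abs(c - cc))) for c in range(cols)]
        for r in range(rows)
    ]


def haptic_intensity(value: int, peak: int = 5) -> float:
    """Map a pod value linearly to a normalized intensity in [0, 1]."""
    return value / peak


grid = pod_values(5, 5, (2, 2))
```

For this 5 × 5 grid centred on row 2, column 2, the middle row is `[3, 4, 5, 4, 3]`, and `haptic_intensity(5)` returns `1.0` for the central pod. A Euclidean or Manhattan distance would work just as well; the choice only changes the shape of the rings.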

Hardware

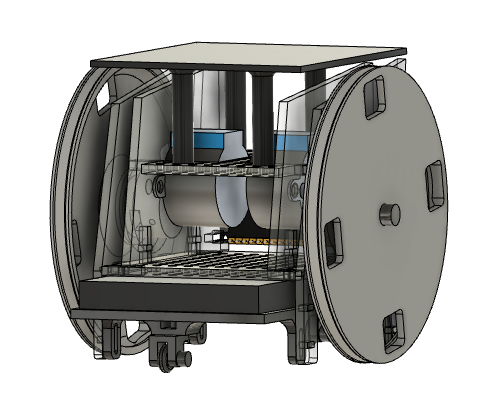

During the internship, we built numerous iterations of small cubic robots that serve as platforms for haptic interfaces. We modeled the system in Fusion 360 and fabricated it with 3D printing and laser cutting.

The robots had to satisfy several constraints:

- Desktop-Scale System: To simplify the user interaction environment, the system must fit on a desk. We aimed for a size where each robot fits within a 10 × 10 × 10 cm cube.

- Wireless Robots: The system consists of multiple robots with autonomous trajectories; each robot must carry its own power source and receive instructions without any physical connection. The main reason is to avoid having to handle cable entanglement in the algorithms.

- DIY Spirit: We want to build the project in a Do It Yourself spirit: designing the system ourselves instead of buying a pre-made one, and making it easy to fabricate for someone with limited robotics knowledge.
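The collision-avoidance requirement combined with the 10 cm footprint suggests a simple conservative check, sketched here under explicit assumptions: each robot is modelled as the circle enclosing its 10 × 10 cm footprint (radius 5√2 ≈ 7.07 cm), positions are given in centimetres on the desk plane, and `MARGIN_CM` is a hypothetical safety margin. The function `colliding_pairs` is illustrative and is not the project's swarm-management algorithm.

```python
from __future__ import annotations

import math
from itertools import combinations

FOOTPRINT_CM = 10.0                           # side of the square footprint
RADIUS_CM = FOOTPRINT_CM * math.sqrt(2) / 2   # enclosing circle, ~7.07 cm
MARGIN_CM = 2.0                               # hypothetical safety margin


def colliding_pairs(positions: dict[str, tuple[float, float]],
                    margin: float = MARGIN_CM) -> list[tuple[str, str]]:
    """Return every pair of robots whose enclosing circles, enlarged by
    `margin`, are closer than allowed (centre distance below 2r + margin)."""
    min_dist = 2 * RADIUS_CM + margin
    return [
        (a, b)
        for (a, pa), (b, pb) in combinations(positions.items(), 2)
        if math.dist(pa, pb) < min_dist
    ]


positions = {"r0": (0.0, 0.0), "r1": (15.0, 0.0), "r2": (40.0, 0.0)}
conflicts = colliding_pairs(positions)
```

With robots at x = 0, 15 and 40 cm, only `("r0", "r1")` is flagged, since 15 cm is below the 2 × 7.07 + 2 ≈ 16.1 cm threshold. Checking every pair is quadratic in the number of robots, which is negligible for a swarm of three to five.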
